Abstract

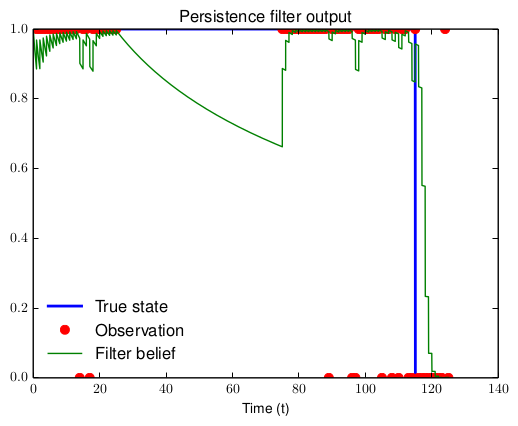

The feature-based graphical approach to robotic mapping provides a representationally rich and computationally efficient framework for an autonomous agent to learn a model of its environment. However, this formulation does not naturally support long-term autonomy because it lacks a notion of environmental change. In reality, “everything changes and nothing stands still,” and any mapping and localization system that aims to support truly persistent autonomy must be similarly adaptive. To that end, we propose a novel feature-based model of environmental evolution over time. Our approach develops an expressive probabilistic generative model of feature persistence that describes the survival of abstract semi-static environmental features over time. We show that this model admits a recursive Bayesian estimator, the persistence filter, that provides an exact online method for computing, at each moment in time, an explicit Bayesian belief over the persistence of each feature in the environment. By incorporating this feature persistence estimation into current state-of-the-art graphical mapping techniques, we obtain a flexible, computationally efficient, and information-theoretically rigorous framework for lifelong environmental modeling in an ever-changing world.